Real-time Display of Structures Extracted from 3D Data Sets

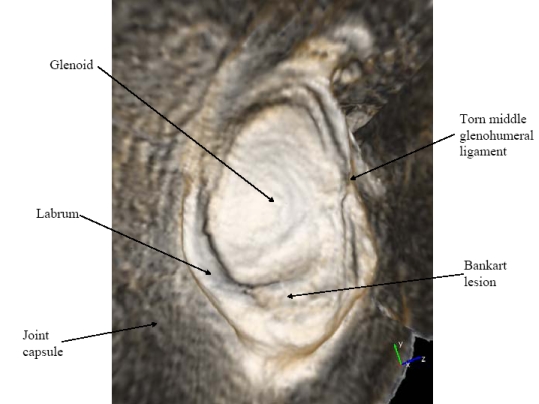

The goal of this work is to enable more rapid and accurate diagnosis of pathology from three-dimensional (3D) medical images by augmenting standard volume rendering techniques to display features within the volume that would otherwise be occluded. Viewing 3D reconstructions of objects from magnetic resonance imaging (MRI) and computed tomography (CT) data is more intuitive than mentally reconstructing these objects from orthogonal slices of the data, because the human visual system understands 3D shape through the perception of surfaces (Gibson 1979; Ware 2004). Viewing slices of medical images becomes increasingly problematic as ever-improving scanner resolutions produce ever-larger data sets. When such data sets are displayed with volume rendering, the choice of transfer function is critical, since it determines which features of the data are displayed. Due to occlusion, however, it is often the case that no transfer function can produce the views most useful for diagnosing pathology. To address this problem, CISMM personnel developed Flexible Occlusion Rendering (FOR), which allows radiologists to view structures as if the intervening surfaces had been peeled away.
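To make the occlusion problem concrete, the sketch below shows generic front-to-back compositing of scalar samples along a single viewing ray, with a transfer function mapping each sample to color and opacity. It is a textbook illustration, not CISMM or FOR code; `composite_ray`, `example_tf`, and the 0.99 early-termination threshold are hypothetical choices made for this example.

```python
from typing import Callable, Sequence, Tuple

# An RGBA transfer function maps one scalar sample to (red, green, blue, opacity).
TransferFunction = Callable[[float], Tuple[float, float, float, float]]


def composite_ray(samples: Sequence[float], tf: TransferFunction,
                  termination_alpha: float = 0.99) -> Tuple[Tuple[float, float, float], float]:
    """Front-to-back compositing of the scalar samples met along one viewing ray."""
    r_acc = g_acc = b_acc = a_acc = 0.0
    for s in samples:
        r, g, b, a = tf(s)
        # Each sample contributes only through the transparency left in front of it.
        weight = (1.0 - a_acc) * a
        r_acc += weight * r
        g_acc += weight * g
        b_acc += weight * b
        a_acc += weight
        # Early ray termination: anything further along the ray is effectively hidden.
        if a_acc >= termination_alpha:
            break
    return (r_acc, g_acc, b_acc), a_acc


def example_tf(s: float) -> Tuple[float, float, float, float]:
    """Hypothetical transfer function: two opaque tissue classes, all else transparent."""
    if s >= 0.85:
        return (0.0, 1.0, 0.0, 1.0)  # green tissue
    if s >= 0.65:
        return (1.0, 0.0, 0.0, 1.0)  # red tissue
    return (0.0, 0.0, 0.0, 0.0)      # background: fully transparent
```

For example, `composite_ray([0.0, 0.7, 0.0, 0.9], example_tf)` returns `((1.0, 0.0, 0.0), 1.0)`: the opaque red sample hides the green one behind it. Lowering the red opacity would reveal the green tissue, but when the near and far surfaces are the same tissue with similar intensities, any transfer function that makes the far surface visible also makes the near surface visible, and the near one wins.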

FOR has been applied in three areas: virtual arthroscopy of joints from MRI data (Borland 2005; Borland 2006), virtual ureteroscopy of the renal collecting system from contrast-enhanced CT data (Fielding 2006), and visualization of knee fractures from CT data. In all three cases, FOR enables useful views of the data that are not possible with standard volume rendering.

The key insights and features of FOR are: (1) finding the separating features between objects, rather than the boundaries of the objects themselves, enables more robust determination of undesired occlusions; (2) these separating features can be located robustly in data sets with noise and partial-volume effects by finding intensity peaks using hysteresis; (3) the features can be found during the rendering process, without the need for segmentation; (4) the features can be found either in the same data set used for rendering or in a separate data set optimized for occlusion determination; (5) finding the separation enables a seamless transition from occluded viewpoints to occluding viewpoints; and (6) the scale of the data set used for feature detection can be adjusted without altering the resolution of the data being rendered (Borland 2007). These insights have been submitted as an international patent application (Borland 2006) and have been licensed by Siemens for use in its Syngo software package.
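The text does not spell out the ray-casting details, so the following is only a minimal sketch of one plausible reading of insights (2) through (4), not the published FOR implementation or any Siemens API. It assumes that the occluding layer is removed by resetting the accumulated color and opacity once a separating feature is confirmed, and that a peak is confirmed when the occlusion signal first rises above a `high` threshold and later falls below a `low` threshold. The names `peel_composite` and `example_tf`, the thresholds, and the "first peak only" rule are all hypothetical. The occlusion samples are a separate sequence, so they may come from the rendered data itself or from another data set (for instance a smoothed copy), in the spirit of insights (4) and (6).

```python
from typing import Callable, Sequence, Tuple

TransferFunction = Callable[[float], Tuple[float, float, float, float]]


def peel_composite(render_samples: Sequence[float],
                   occlusion_samples: Sequence[float],
                   tf: TransferFunction,
                   high: float, low: float,
                   termination_alpha: float = 0.99) -> Tuple[Tuple[float, float, float], float]:
    """Front-to-back compositing that discards everything in front of the first
    separating feature, detected as a hysteresis peak in the occlusion signal.

    Both sample sequences are assumed to be taken at the same positions along the ray.
    """
    if len(render_samples) != len(occlusion_samples):
        raise ValueError("render and occlusion samples must be aligned")
    if not low < high:
        raise ValueError("hysteresis requires low < high")
    r_acc = g_acc = b_acc = a_acc = 0.0
    armed = False   # occlusion signal has risen above `high`
    peeled = False  # a separating feature was found (only the first one is used here)
    for s_render, s_occ in zip(render_samples, occlusion_samples):
        if not peeled:
            if s_occ > high:
                armed = True
            elif armed and s_occ < low:
                # Peak confirmed once the signal falls back below `low`; dips that
                # stay between `low` and `high` (e.g. noise) do not trigger it.
                # Drop the occluding material accumulated so far.
                r_acc = g_acc = b_acc = a_acc = 0.0
                peeled = True
        r, g, b, a = tf(s_render)
        weight = (1.0 - a_acc) * a
        r_acc += weight * r
        g_acc += weight * g
        b_acc += weight * b
        a_acc += weight
        # Early termination is only safe after peeling: before that, an opaque
        # occluder may still be discarded.
        if peeled and a_acc >= termination_alpha:
            break
    return (r_acc, g_acc, b_acc), a_acc


def example_tf(s: float) -> Tuple[float, float, float, float]:
    """Hypothetical transfer function; the two tissue values differ only so the
    result shows which surface the ray ended on."""
    if s > 0.7:
        return (1.0, 0.0, 0.0, 1.0)  # near surface: red
    if s > 0.5:
        return (0.0, 1.0, 0.0, 1.0)  # far surface: green
    return (0.0, 0.0, 0.0, 0.0)      # fluid and background: transparent
```

With `render = [0.0, 0.8, 0.1, 0.1, 0.1, 0.6, 0.6]` and an occlusion signal that peaks in the fluid between the surfaces, `occ = [0.0, 0.1, 0.9, 1.0, 0.2, 0.1, 0.1]`, the call `peel_composite(render, occ, example_tf, high=0.7, low=0.3)` ends on the green far surface, whereas an all-zero occlusion signal never triggers a peel and reproduces ordinary compositing (red). Using two thresholds rather than one is what keeps a noisy dip inside the fluid from being mistaken for the end of the separating feature.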

Collaborator

John Clarke, M.D., UNC Radiology.

Funding: UNC Radiology, Siemens.