Video Spot Tracker Manual

The Video Spot Tracker program is used to track the motion of one or more spots (particles) in an FFMPEG-compatible video file, from a Microsoft DirectShow-compatible camera, from a Roper Scientific camera, from a DiagInc SPOT camera, from a camera attached to an EDT video capture board (we use a Pulnix camera this way at UNC), or from a set of TIFF, PPM, PGM, or BMP files. (It can also read other, compressed file formats supported by ImageMagick, but these are not included in the default set because compression distorts images in ways that affect tracking accuracy.) Raw, uncompressed 8-bit video files are also supported.

Downloading and installing the program

An installer for the program and manual can be downloaded from the CISMM software download page. It requires Microsoft DirectX version 9.0a or higher.

Converting the VRPN output files to Matlab format requires the VRPNLogToMatlab program, also available from the CISMM download page. These packages can be installed in any order.

Cost of Use

The development, dissemination, and support of this program is paid for by the National Institutes of Health National Institute for Biomedical Imaging and Bioengineering through its National Research Resource in Computer-Integrated Systems for Microscopy and Manipulation at the University of North Carolina at Chapel Hill. This program is distributed without charge, subject to the following terms:

- Acknowledgement in any publication, written, videotaped, or otherwise produced, that results from using this program. The acknowledgement should credit: CISMM at UNC-CH, supported by the NIH NIBIB (NIH 5-P41-RR02170).

- Furnish a reference to (and preferably a copy of) any publication, including videotape, that you produce and disseminate outside your group using our program. These should be addressed to: taylorr@cs.unc.edu; Russell M. Taylor II, Department of Computer Science, Sitterson Hall, University of North Carolina at Chapel Hill, Chapel Hill, NC 27599-3175.

Thank-You Ware: Rather than making you register to download our software or join a mailing list or provide other personally-identifying material to use our code, we’ve come up with the following easy and anonymous way for you to let us know we’re helping the community. When you press the “Say Thank You!” button to let us know you appreciate having the tool, the application sends a web query to our server and logs your response (not your name or your phone number, just a web hit count). We add up these counts and report them to the reviewers for our renewal applications when we ask NIBIB to continue funding us to maintain our existing tools and to build new ones. Please press the button whenever you feel like the tool has been helpful to you.

Running the program

The program is run by dragging a video file or image file onto the desktop icon that was created when the program was installed. To select a stack of image files that are numbered consecutively, drag any one of the files onto the icon. It can also be run by selecting one of the camera-specific shortcuts from Start Menu/All Programs/NSRG/Video Spot Tracker. If you run the program directly by double-clicking on the icon, it will ask for a video or image file (AVI, TIF, or BMP) that it should open.

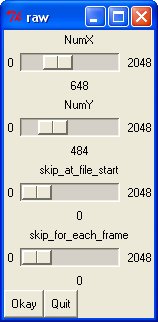

If you open a raw video file, the program will try to guess the frame size by checking whether it matches a 648×484 image (some Pulnix cameras) or a 1024×768 image with a 112-byte per-frame header (some Point Grey cameras). If neither of these seems to work, it will bring up a dialog box like the one shown to the left that asks you about the file format so that it can read it. The NumX and NumY parameters specify the image size. The skip_at_file_start parameter tells how many bytes to skip at the beginning of the file (to skip a file header), and the skip_for_each_frame value tells how many bytes to skip at the beginning of each frame in the video (to skip any per-frame header information). The raw file must contain single-channel, 8-bit values to be interpreted properly. If you enter incorrect values, the images will appear scrambled and slanted, and will jump around between frames.
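
The raw-file layout described above can be sketched in Python with NumPy. This is a minimal illustration, not the program's own reader: the names `read_raw_frames` and `guess_frame_size` are hypothetical, and the size guess simply checks whether the file length is a whole number of frames for each of the two layouts named above, which is an assumption about how the program decides.

```python
import numpy as np

def read_raw_frames(data: bytes, num_x: int, num_y: int,
                    skip_at_file_start: int = 0,
                    skip_for_each_frame: int = 0) -> np.ndarray:
    """Split a raw single-channel 8-bit byte stream into (frames, num_y, num_x).

    Each frame is assumed to be skip_for_each_frame header bytes followed by
    num_x * num_y pixel bytes stored row by row.
    """
    body = data[skip_at_file_start:]          # drop the file header
    pixels = num_x * num_y
    stride = skip_for_each_frame + pixels     # bytes per frame, header included
    n_frames = len(body) // stride            # ignore a trailing partial frame
    frames = np.empty((n_frames, num_y, num_x), dtype=np.uint8)
    for i in range(n_frames):
        start = i * stride + skip_for_each_frame   # skip the per-frame header
        frames[i] = np.frombuffer(body, dtype=np.uint8, count=pixels,
                                  offset=start).reshape(num_y, num_x)
    return frames

def guess_frame_size(file_size: int):
    """Try the two layouts the manual mentions; return None if neither fits."""
    candidates = [(648, 484, 0), (1024, 768, 112)]  # (num_x, num_y, per-frame header)
    for num_x, num_y, header in candidates:
        if file_size > 0 and file_size % (num_x * num_y + header) == 0:
            return num_x, num_y, header
    return None
```

Wrong NumX or skip values shift every row by a constant amount, which is why a bad guess shows up as the slanted, scrambled images described above.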

NOTE: With Windows XP Service Pack 2 and with other firewall software, running the program causes a dialog box to appear asking whether to block the program. The program needs to be unblocked only if it is to be connected to a local or remote feedback system that relies on the tracking data. The dialog appears because the program opens the Internet port assigned to it (3883) by the Internet Assigned Numbers Authority (IANA) to export the data through the standard VRPN protocol (www.vrpn.org).

Using the program

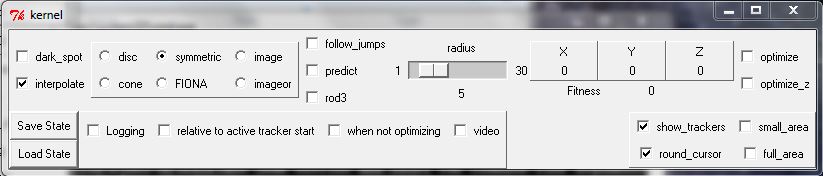

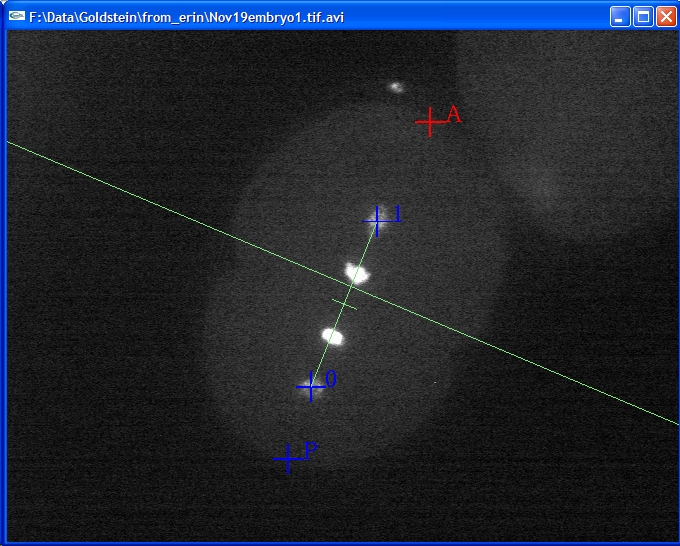

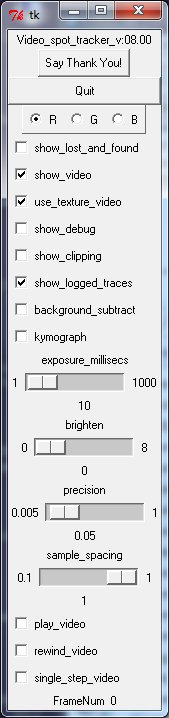

When the program is run and a file is selected, four windows will appear. The image window that appears on the lower right contains the first image within the video file or a continually-updating image from a live camera feed. The control panel named tk that appears on the left when the program is run (shown here to the right) contains a number of interaction widgets that control the operation of the program. The kernel control panel that appears above the image window (described later) controls the type of tracking kernel being used. The log control panel is described later.

Selecting the color channel to use

If the video file (or video camera) contains different data sets in the red, green, and blue channels, then you will need to select the one you want to view. By default, the red channel R is selected. To select a different channel, click on G for green or B for blue. The video window should change to display the selected channel.

Making images display brighter

There is a brighten slider that will let you increase the displayed intensity of images that don’t use their whole range (if the integration time on the camera was very low, for example). Sliding it to the right will make images that are too dark visible so you can see where to put the tracker. Setting it too high will cause a white image (when use_opengl_video is set) or a strange-looking, wrapped-around image (when it is not). This setting has no effect on tracking, only on the images shown on the screen.
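
The two failure modes above (white versus wrapped-around) are what 8-bit display values do when they are pushed past 255. The sketch below assumes, purely for illustration, that brighten acts as a multiplicative gain; the function names are hypothetical and the program's actual mapping may differ.

```python
import numpy as np

def brighten_saturating(img, gain):
    """Scale and clamp to 0..255: too much gain drives pixels to white."""
    scaled = img.astype(np.float64) * gain
    return np.clip(scaled, 0, 255).astype(np.uint8)

def brighten_wrapping(img, gain):
    """Scale with 8-bit modular arithmetic: values above 255 wrap back to dark."""
    scaled = np.round(img.astype(np.float64) * gain).astype(np.int64)
    return (scaled % 256).astype(np.uint8)
```

With a gain of 2, a pixel of 200 becomes 255 in the saturating path but 144 in the wrapping path, which is the "strange-looking" result.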

Selecting spots to track

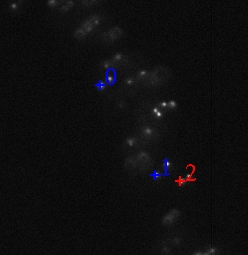

To select the first spot to track, click on its center with the left mouse button. A red plus sign will appear at the location you have selected. If you want to adjust its radius, you can hold the mouse button down after you have clicked and pull the mouse away from the center; pretty soon, the radius of the disk will track the distance you have pulled the mouse from the center. You can also adjust the radius by moving the radius slider within the kernel control panel, or by clicking on the number beneath that slider and entering a new value in the dialog box that appears.

When a new tracker is created it turns red to indicate that it is the active tracker; all other trackers turn blue. To create more trackers, click on the center of each new spot with the left mouse button. The default radius of each new spot tracker will match the radius of the tracker that was active when the new one is created. Each tracked spot will be labeled with an index that matches its index in the stored tracking file.

If you click with the right mouse button, the closest tracker is moved to the location you picked and it becomes the active tracker (in red). The active tracker’s position and radius are shown in the user interface, and they can be edited by clicking on the number beneath the relevant slider. By selecting different trackers using the right mouse button, you can adjust the position of each tracker one at a time.

You can drag a tracker to a new location by clicking the right mouse button on top of it and then holding the button down to drag the tracker to a new location. Note that when optimization is turned on, the tracker will always jump to the optimum location nearest the current location of the mouse cursor.

You can delete an unwanted tracker by making it the active tracker (click on it with the right mouse button) and then pressing the delete or backspace key on the keyboard. In version 3.02 and higher, indices are not repeated even after trackers are deleted.
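
The tracker bookkeeping described above (left click adds, right click moves the nearest tracker, delete removes the active one, indices are never reused) can be modeled as a small Python class. This is a hypothetical sketch of the behavior, not the program's data structure; `TrackerSet`, its methods, and `DEFAULT_RADIUS` are illustrative names.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional

DEFAULT_RADIUS = 5.0  # hypothetical radius for the very first tracker

@dataclass
class Tracker:
    index: int
    x: float
    y: float
    radius: float

@dataclass
class TrackerSet:
    trackers: List[Tracker] = field(default_factory=list)
    next_index: int = 0               # only ever increases, so indices are not reused
    active: Optional[Tracker] = None

    def add(self, x, y):
        """Left click: the new tracker inherits the active radius and becomes active."""
        radius = self.active.radius if self.active is not None else DEFAULT_RADIUS
        tracker = Tracker(self.next_index, x, y, radius)
        self.next_index += 1
        self.trackers.append(tracker)
        self.active = tracker
        return tracker

    def move_nearest(self, x, y):
        """Right click: move the closest tracker to (x, y) and make it active."""
        tracker = min(self.trackers, key=lambda t: math.hypot(t.x - x, t.y - y))
        tracker.x, tracker.y = x, y
        self.active = tracker
        return tracker

    def delete_active(self):
        """Delete/Backspace: drop the active tracker without recycling its index."""
        self.trackers = [t for t in self.trackers if t is not self.active]
        self.active = None
```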

If you prefer to not have the centers of the tracked objects obscured by the tracking markers, select the round_cursor checkbox from the tk control panel. This will replace the plus signs with a circle around the center at twice the radius that the kernel is set to use.

Selecting the type of tracker and tracking/logging parameters

There are four basic types of tracking kernels available: disc, cone, symmetric, and FIONA; the composite rod3 kernel and the image-based kernels are described after them. The properties of all kernels are controlled using interface widgets in the kernel control panel.

For tracking spots that are even in intensity, or which have uneven intensities within them but a defined circular edge, the disc kernel should be used. The radius should be set to match the radius of the spots you wish to track. The interpolate checkbox should be set for more accuracy and cleared for more speed.

For tracking spots that are brighter in the center and drop off to dim (or darkest in the center and ramp up to bright), the cone kernel should be used. For cone tracking, the radius should be set about 1/3 larger than the spots you want to track (giving the kernel a good sampling of the background as well as the spot). The setting of the interpolate check box does not matter for the cone tracker (this tracker always interpolates).

A parameter relevant for both the disc and cone kernels is whether the spots are dark points on a lighter background (the default) or lighter points on a dark background. This is controlled using the dark_spot check box located at the top of the user interface. You should set this for the type of spot you are seeking.

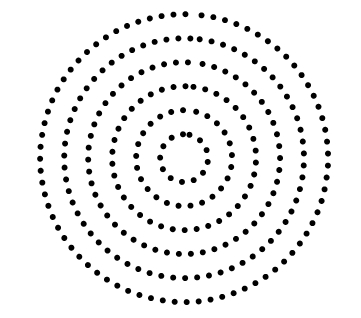

If the bead profile is changing over time, or if it does not fit well into one of the above categories, then the symmetric tracker should be chosen. This tracker operates by locating the minimum variance in concentric rings around the bead center. It sums the variance in circles of radius 1, 2, 3, … up to the radius setting, normalizing each circle’s contribution by its circumference so that every ring is weighted evenly. The radius should be set at least slightly larger than the bead that is to be tracked; setting it larger will not harm tracking except to make the program run more slowly. The setting of the dark_spot check box has no effect when the symmetric tracker is chosen.
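
The ring-variance cost described above can be sketched with NumPy. This is an illustration under stated assumptions, not the program's code: samples on each ring are bilinearly interpolated, each ring gets roughly one sample per pixel of circumference, and `np.var` supplies the division by the number of samples. `bilinear` and `symmetric_cost` are hypothetical names, and the caller must keep every ring inside the image.

```python
import numpy as np

def bilinear(img, x, y):
    """Bilinearly interpolate img at (x, y); x is the column, y the row."""
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    fx, fy = x - x0, y - y0
    top = (1 - fx) * img[y0, x0] + fx * img[y0, x0 + 1]
    bottom = (1 - fx) * img[y0 + 1, x0] + fx * img[y0 + 1, x0 + 1]
    return (1 - fy) * top + fy * bottom

def symmetric_cost(img, cx, cy, radius):
    """Sum of per-ring intensity variances; lower means more radially symmetric."""
    img = np.asarray(img, dtype=np.float64)
    total = 0.0
    for r in range(1, int(radius) + 1):
        n = max(4, int(round(2 * np.pi * r)))   # about one sample per pixel of arc
        angles = 2 * np.pi * np.arange(n) / n
        ring = np.array([bilinear(img, cx + r * np.cos(a), cy + r * np.sin(a))
                         for a in angles])
        total += ring.var()   # var() divides by the sample count (~circumference)
    return total
```

Because the cost only measures how uniform each ring is, it does not care whether the bead is dark or bright, which matches the note that dark_spot has no effect on this kernel.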

For fluorescent emitters that are much smaller than a pixel, use the FIONA kernel. This is based on code from Paul Selvin’s laboratory for implementing “Fluorescence Imaging with One Nanometer Accuracy.” The technique is described in “Molecular motors one at a time: FIONA to the rescue” by Comert Kural, Hamza Balci and Paul Selvin from UIUC. It finds the least-squares best-fit 2D Gaussian to the area around the point, approximating the effect of integrating an Airy pattern centered at the emitter. The technique implemented here differs from the UIUC implementation in two ways. First, it assumes a symmetric Gaussian (which in turn assumes square pixels). Second, it only fits out to four standard deviations from the center (this prevents somewhat distant spots from interfering with the optimization). Even with this reduced radius, if two points come near each other, they will interfere with one another’s tracking even if the two bright spots do not overlap. Hint: If you are using the FIONA kernel, you may want to turn on the follow_jumps control; this will make the tracking slower, but will keep it from getting lost if the particle moves too far in a single frame.
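
The fit described above can be sketched with NumPy. The symmetric Gaussian and the four-standard-deviation window come from the text; everything else is an assumption for illustration: amplitude and background are solved by linear least squares for each trial center and width, and the center and width are found by a simple step-halving pattern search. `gaussian_mse`, `fit_fiona`, and `MIN_SIGMA` are hypothetical names; this is neither the UIUC nor the CISMM code.

```python
import numpy as np

MIN_SIGMA = 0.5  # hypothetical lower bound that keeps the fit window from collapsing

def gaussian_mse(img, cx, cy, sigma):
    """Mean squared residual of the best amplitude/background at a fixed center and sigma.

    Only pixels within 4 sigma of (cx, cy) take part in the fit.
    """
    yy, xx = np.indices(img.shape)
    d2 = (xx - cx) ** 2 + (yy - cy) ** 2
    mask = d2 <= (4.0 * sigma) ** 2
    g = np.exp(-d2[mask] / (2.0 * sigma ** 2))
    design = np.column_stack([g, np.ones_like(g)])  # model: amplitude * g + background
    values = img[mask]
    coeffs, _, _, _ = np.linalg.lstsq(design, values, rcond=None)
    resid = values - design @ coeffs
    return float(resid @ resid) / resid.size, coeffs

def fit_fiona(img, cx, cy, sigma, precision=0.01):
    """Pattern search over (cx, cy, sigma), halving the step down to `precision`."""
    img = np.asarray(img, dtype=np.float64)
    params = np.array([cx, cy, sigma], dtype=np.float64)
    best, _ = gaussian_mse(img, *params)
    step = 1.0
    while step >= precision:
        improved = False
        for i in range(3):
            for sign in (1.0, -1.0):
                trial = params.copy()
                trial[i] += sign * step
                if trial[2] < MIN_SIGMA:
                    continue
                err, _ = gaussian_mse(img, *trial)
                if err < best:
                    best, params, improved = err, trial, True
        if not improved:
            step /= 2.0
    _, (amplitude, background) = gaussian_mse(img, *params)
    return params, amplitude, background
```

Solving amplitude and background in closed form leaves only three parameters for the search, which keeps the sketch short; a production fitter would more likely use a Levenberg-Marquardt routine.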

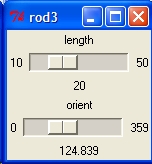

The rod3 kernel is a composite kernel. This is actually a grouping of three subkernels, each of which is one of the above types. These three kernels lie along the same line and move as a unit; they seek to track bars in the image. When this kernel box is selected, a new control panel will appear. This control panel is shown to the right, and it enables control over the length (in pixels) and orientation (in degrees) of the bar trackers. As with the radius and position controls described above, these controls change to display and control the values of the active tracker. The most successful bars may be made of cone kernels and be slightly less than the actual length of the bar on the screen. The symmetric kernel is probably the least useful. Note that if the bars flex, this tracker may not work very well.
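
The length and orientation controls determine where the three sub-kernels sit. The helper below is a hypothetical sketch that assumes the sub-kernels are placed at the bar center and at its two ends (half the length away on either side); it uses the orientation convention given in the data description, where +Y points down the screen, so 45 degrees slants up and to the right.

```python
import math

def rod3_subkernel_centers(cx, cy, length, orientation_deg):
    """Assumed centers of the three sub-kernels of a rod3 tracker, in pixels."""
    theta = math.radians(orientation_deg)
    dx = 0.5 * length * math.cos(theta)
    dy = -0.5 * length * math.sin(theta)   # screen +Y points down
    return [(cx - dx, cy - dy), (cx, cy), (cx + dx, cy + dy)]
```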

The image kernel is an image-based kernel that can be used to track particles that are neither radially symmetric nor rods. This kernel should be used on any object for which none of the other kernels are applicable. This image-based kernel begins by reading in an image from the first frame of the video at the specified location, with width and height equal to twice the specified radius; in each subsequent frame, it searches in X and Y for the position that best fits the initial image. The number of frames to average over can be set with a slider; the default value of 0 just uses the original image. Setting the value larger will let the tracker adjust as the shape of the object changes, but this will also cause the tracker to drift off the visual center of the object, because there is nothing to stabilize it to the original object center. If the tracked object rotates, the imageor kernel is useful: its orientation can be set (similar to the rod3 kernel) before the initial reference image is captured, and when tracking subsequent frames, the tracker takes steps in orientation as well as in X and Y, recording orientation data for each frame as well as position. The radius should be set so that the tracker encompasses the whole object. This is most easily judged with the round_cursor box left unchecked, because the line displayed is then equal to the tracker’s width.
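
A minimal sketch of the image-kernel idea, assuming NumPy: capture a square reference patch, then search nearby integer positions for the lowest sum of squared differences. The SSD metric, the integer-only search, and the exponential running average used for the frames-to-average setting are assumptions, and orientation (the imageor variant) is omitted. The function names are hypothetical.

```python
import numpy as np

def extract_template(frame, cx, cy, radius):
    """Square reference patch, 2*radius pixels on a side, around (cx, cy) rounded to pixels."""
    r, x, y = int(round(radius)), int(round(cx)), int(round(cy))
    return frame[y - r:y + r, x - r:x + r].astype(np.float64)

def match_template(frame, template, cx, cy, search):
    """Integer-pixel search within +/-search pixels for the lowest sum of squared differences."""
    r = template.shape[0] // 2
    best_ssd, best_x, best_y = np.inf, int(round(cx)), int(round(cy))
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            x, y = int(round(cx)) + dx, int(round(cy)) + dy
            if x - r < 0 or y - r < 0:
                continue  # window would fall off the top or left edge
            patch = frame[y - r:y + r, x - r:x + r].astype(np.float64)
            if patch.shape != template.shape:
                continue  # window fell off the bottom or right edge
            ssd = float(((patch - template) ** 2).sum())
            if ssd < best_ssd:
                best_ssd, best_x, best_y = ssd, x, y
    return best_x, best_y

def update_template(template, patch, n_average):
    """n_average == 0 keeps the first-frame template; otherwise blend in the new patch
    as an exponential running average over roughly n_average frames."""
    if n_average <= 0:
        return template
    return template + (patch - template) / n_average
```

The update function shows why averaging causes drift: once the template is blended with later patches, small position errors become part of the reference and nothing pulls it back to the original center.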

Enabling the trackers to follow jumps in bead position

The follow_jumps check-box activates a more-robust tracking algorithm that first looks for the best image match within 4 radii of the prior position and then performs the standard kernel match. This makes the tracking run more slowly, but is more robust to bead motion between frames. It does not affect the accuracy or the style of the main optimization for the kernel, it is only used to initialize the new search location between frames.

Following can be made faster (and slightly less reliable) by using the estimated velocity to predict where the bead will be and reducing the search area. This helps only for beads whose motion is driven by a force; using velocity estimation on Brownian motion will produce worse results. Prediction is enabled by selecting the predict check-box. It is not recommended to use prediction without follow_jumps unless the motion is very linear. To get the tracker to lock on reliably, it is important to catch the bead when it is moving relatively slowly, because the initial velocity estimate is zero.
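
The follow_jumps and predict behavior can be sketched as a coarse search that only chooses a starting point for the normal kernel optimization. The 4-radius window comes from the text; the cost callback, the reduced 2-radius window used with prediction, and all names here are hypothetical assumptions for illustration.

```python
import math

def follow_jumps_start(cost, prev_xy, radius, predict=False, velocity=(0.0, 0.0),
                       search_radii=4.0, predict_radii=2.0):
    """Pick a starting point for the per-frame kernel optimization.

    cost(x, y) -> float stands in for whatever image-match score is used (lower is
    better). Without prediction, integer offsets within search_radii * radius of the
    previous position are tried; with prediction, the search starts at the
    extrapolated position and covers a smaller, assumed window.
    """
    px, py = prev_xy
    if predict:
        px, py = px + velocity[0], py + velocity[1]
    reach = (predict_radii if predict else search_radii) * radius
    n = int(math.ceil(reach))
    best = None
    for dy in range(-n, n + 1):
        for dx in range(-n, n + 1):
            if dx * dx + dy * dy > reach * reach:
                continue  # stay inside the circular search region
            c = cost(px + dx, py + dy)
            if best is None or c < best[0]:
                best = (c, px + dx, py + dy)
    return best[1], best[2]

def update_velocity(old_xy, new_xy):
    """Per-frame velocity estimate; callers start it at zero, as the manual notes."""
    return (new_xy[0] - old_xy[0], new_xy[1] - old_xy[1])
```

With a zero initial velocity and a small predicted window, a fast-moving bead can fall outside the search region on the first frames, which is why the text recommends catching the bead while it moves slowly.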

Activating the trackers

Once you have selected the type of tracker to use, and have selected spots to track, check the optimize checkbox. When you do this, the trackers should all move themselves to the centers of the spots they were started on. You can continue to add trackers with the left mouse button after you have checked the optimize checkbox; they will try to follow the center of the spot even as you adjust their radius.

If you have saved a radially-averaged spread function for the spots you are tracking (using the CISMM Video Optimizer program), you can use it to estimate Z values for the spots by clicking the optimize_z button and then selecting the spread function’s TIF file in the dialog box that appears. The Z location will now be updated based on the spread-function stack.

Checking for lost trackers and Auto-finding beads

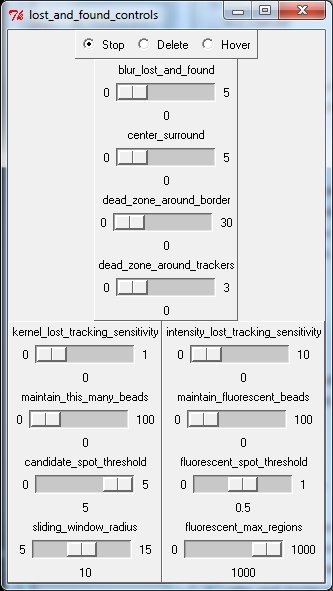

There is a show_lost_and_found button on the main control panel that brings up the control panel shown to the right.

Blur/Surround: There are two controls that affect how the image is processed before the lost or found processing is performed on it. These do not affect the actual tracking, but do affect how beads are located or discarded. If you turn on show_debug on the main control panel, the image will reflect the settings to help you determine effective ones. The window will also indicate where beads would be found given the current threshold and auto-find settings. The blur_lost_and_found slider controls the width in pixels of a Gaussian kernel that is convolved with the image to remove noise before the lost and found processing is done. A setting of zero disables this feature, and higher numbers blur away larger and larger features. The center_surround slider subtracts the surrounding value from each point (the surround is found by blurring with a kernel whose width is the sum of the two slider values), reducing the impact of the background brightness level. This slider is useful in fluorescence videos where the background is non-uniform. It only takes effect if both blur_lost_and_found and center_surround are nonzero.
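
The blur and center-surround preprocessing can be sketched as a difference of two Gaussian blurs using NumPy only. Treating each slider value as the Gaussian standard deviation in pixels, and padding by repeating edge pixels, are assumptions; the function names are hypothetical.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge padding; sigma <= 0 returns the image unchanged."""
    img = np.asarray(img, dtype=np.float64)
    if sigma <= 0:
        return img
    half = int(np.ceil(3 * sigma))
    x = np.arange(-half, half + 1)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    k /= k.sum()
    pad = np.pad(img, half, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, pad)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def lost_and_found_image(img, blur, center_surround):
    """Noise blur, then (if both sliders are nonzero) subtract a wider local background."""
    out = gaussian_blur(img, blur)
    if blur > 0 and center_surround > 0:
        # The surround uses a blur whose width is the sum of the two slider values.
        out = out - gaussian_blur(img, blur + center_surround)
    return out
```

On a uniform background the two blurs cancel, so only features smaller than the surround width survive, which is why the control helps with uneven fluorescence backgrounds.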

Lost: Two tracking sensitivity sliders located on this control panel set thresholds for detection of lost tracking. A threshold setting of zero disables each feature, and is the default. The kernel_lost_tracking_sensitivity is most often useful for brightfield microscopy video or reflected-light videos. Useful values for the threshold depend on the type of kernel you are using to track; this slider provides a normalized “how sensitive” control that should be approximately the same across types (the FIONA tracker uses the whole range 0-1, but the symmetric kernel needs only a very small number). If the threshold is exceeded by one or more beads while tracking, each such bead is marked as lost.

The intensity_lost_tracking_sensitivity slider is most useful for fluorescence videos. Its value is independent of the kernel being used; it works based on how different the brightness at the center of the tracked bead is from the region surrounding the bead. In particular, it finds the mean and variance of the pixel intensities along the edge of a box around the bead center whose side is 1.5 times the bead diameter. It then computes the squared difference between the intensity at the center of the bead and the mean of the border; if this is less than the border variance times the sensitivity setting, the bead is marked as lost. This parameter is particularly useful for fluorescence images with low backgrounds, but it could also be used for dark beads on bright backgrounds.
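
The intensity test above can be written out directly with NumPy. Rounding the center and the box half-width to whole pixels is an assumption of this sketch, and `intensity_lost` is a hypothetical name.

```python
import numpy as np

def intensity_lost(img, cx, cy, radius, sensitivity):
    """True if the bead center does not stand out from the border of its box.

    The box side is 1.5 times the bead diameter (3 * radius), centered on the bead.
    """
    if sensitivity <= 0:
        return False                      # a setting of zero disables the test
    img = np.asarray(img, dtype=np.float64)
    x, y = int(round(cx)), int(round(cy))
    h = int(round(1.5 * radius))          # half of the box side
    box = img[y - h:y + h + 1, x - h:x + h + 1]
    border = np.concatenate([box[0, :], box[-1, :], box[1:-1, 0], box[1:-1, -1]])
    mean, var = border.mean(), border.var()
    center_diff2 = (img[y, x] - mean) ** 2
    return bool(center_diff2 < var * sensitivity)
```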

Strayed: The dead_zone_around_border slider sets a boundary around the edge of the clipping region (see the Faster display update section below for a description of clipping). If a bead gets within this many pixels of the border, it is marked as lost. The dead_zone_around_trackers slider marks beads as lost when they get too close to each other, that is, when their locations are within the specified radius of each other. When either of these sliders is set to 0, its dead-zone feature is disabled.
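
Both dead-zone checks reduce to simple distance tests. In this hypothetical sketch, trackers are given as a dict of index to (x, y), the clipping region as (min_x, max_x, min_y, max_y), and, as an assumption, both trackers of a too-close pair are marked lost.

```python
import math

def strayed(trackers, clip, dead_zone_border, dead_zone_trackers):
    """Return the set of tracker indices that the dead-zone rules would mark as lost."""
    min_x, max_x, min_y, max_y = clip
    lost = set()
    if dead_zone_border > 0:
        for i, (x, y) in trackers.items():
            if min(x - min_x, max_x - x, y - min_y, max_y - y) < dead_zone_border:
                lost.add(i)
    if dead_zone_trackers > 0:
        items = list(trackers.items())
        for a in range(len(items)):
            for b in range(a + 1, len(items)):
                (i, (xi, yi)), (j, (xj, yj)) = items[a], items[b]
                if math.hypot(xi - xj, yi - yj) < dead_zone_trackers:
                    lost.update((i, j))
    return lost
```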

When a bead becomes lost for any of the above reasons, the result depends on the setting for the radio buttons:

- Stop: say “lost” and immediately stop going forward in the video until the problem is corrected. The tracker causing the problem is made active (red), so that you can find the troublemaker easily. The problem can be corrected by moving the tracker back onto the bead, or by reducing the sensitivity threshold so that the criterion is met. Once the threshold is met, the program may skip ahead a frame or two in video formats such as AVI whose player provides buffered playout. It will then pause. Pressing “Play” at this point will resume activity through the file.

- Delete: the offending tracker will disappear and a “pop” sound will be made to let you know it has gone. This is useful if you are tracking spots that go out of the window or when you have lots of beads and are looking for statistics because you can set the program running with a lot of beads tracking and then come back later without worrying about the program stopping or beads wandering around lost.

- Hover: the offending tracker will sit at the location where it last had a bead and keep looking for it again. It will not log while it is lost (there will be no entry during those frames in the logging file). This mode was made to enable tracking of blinking spots with the FIONA kernel.

Found: There are also two independent tracker-finding mechanisms, one for brightfield and one for fluorescence. For brightfield, the maintain_this_many_beads slider, if set above zero, will attempt to automatically place new trackers whenever there are fewer than the number requested. It places them at the locations on the screen that best match the present kernel settings, and it creates them with the present kernel settings. The trackers will not be placed within one radius of the edge of the screen or within two radii of existing trackers. The candidate_spot_threshold can be used to filter out spots that are not likely to be beads; the higher the setting, the more discriminating the test. The kernel_lost_tracking_sensitivity also controls where trackers will be initialized; they will not appear where they would be immediately lost.
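
The brightfield placement rules can be sketched as a greedy pass over candidate positions. The per-pixel `score` map (higher is better) stands in for the kernel match quality and is an assumption, as is treating candidate_spot_threshold as a minimum score; the edge and spacing rules come from the text. `pick_new_trackers` is a hypothetical name.

```python
def pick_new_trackers(score, existing, radius, wanted, threshold):
    """Greedily choose up to `wanted` new tracker positions (x, y).

    score: 2-D list (rows of values), higher = better kernel match.
    existing: list of (x, y) positions of current trackers.
    """
    h, w = len(score), len(score[0])
    candidates = sorted(((score[y][x], x, y) for y in range(h) for x in range(w)),
                        reverse=True)
    placed = []
    for s, x, y in candidates:
        if len(placed) >= wanted or s < threshold:
            break
        if x < radius or y < radius or x >= w - radius or y >= h - radius:
            continue  # within one radius of the image edge
        if any((x - ex) ** 2 + (y - ey) ** 2 < (2 * radius) ** 2
               for ex, ey in existing + placed):
            continue  # within two radii of an existing or just-placed tracker
        placed.append((x, y))
    return placed
```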

The maintain_fluorescent_beads slider, if set above zero, will attempt to automatically place new trackers based on the fluorescent_spot_threshold setting. The threshold is expressed as a fraction of the intensity range in the frame: the darkest pixel is mapped to 0 and the brightest to 1. Any region of the image that is above the threshold is considered a candidate for a bead. The fluorescent_max_regions setting controls how many different regions of the image will be checked to see if a tracker should go there; for very noisy images, there can be a huge number of regions, and this can cause the program to run very slowly or crash. Reducing this number will make the autofind faster, but it may miss spots. The intensity_lost_tracking_sensitivity also controls where trackers will be initialized; they will not appear where they would be immediately lost.
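
The fluorescent candidate search can be sketched as normalize, threshold, and label connected regions, stopping once the region limit is reached. The 4-connected flood fill, centroid placement, and brightest-first ordering are assumptions of this NumPy sketch; `fluorescent_candidates` is a hypothetical name.

```python
import numpy as np

def fluorescent_candidates(img, threshold, max_regions):
    """Centroids (x, y) of connected regions above a normalized threshold, brightest first."""
    img = np.asarray(img, dtype=np.float64)
    lo, hi = img.min(), img.max()
    norm = (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)  # darkest 0, brightest 1
    above = norm > threshold
    seen = np.zeros_like(above)
    h, w = img.shape
    regions = []
    for sy, sx in zip(*np.nonzero(above)):
        if len(regions) >= max_regions:
            break                      # stop early: bounds the work on noisy frames
        if seen[sy, sx]:
            continue
        stack, pixels = [(sy, sx)], []
        seen[sy, sx] = True
        while stack:                   # 4-connected flood fill
            y, x = stack.pop()
            pixels.append((y, x))
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and above[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    stack.append((ny, nx))
        ys, xs = np.array(pixels).T
        regions.append((norm[ys, xs].max(), float(xs.mean()), float(ys.mean())))
    regions.sort(reverse=True)
    return [(x, y) for _, x, y in regions]
```

Stopping after max_regions regions is what makes a smaller limit faster, at the cost of never examining regions later in the scan.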

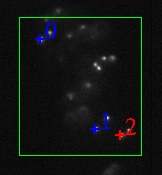

Faster display update

Once you have created all of the trackers you want, you may check the small_area checkbox. This will cause the program to limit its update to a small area of pixels that surrounds the active trackers. This requires less processing and makes the update rate faster when the video is played. For the Roper and SPOT cameras, it also reduces the amount of data transmitted from the camera itself, again increasing the frame rate. This area will update itself as the trackers move, and it extends 4 radii past each tracker. This should enable the trackers to continue to track spots that are moving away from the updated video area. A green box shows up in the video window to indicate the area being updated.
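
The small_area update region can be sketched as a single bounding box that reaches 4 radii past every tracker and is clipped to the image. Representing trackers as (x, y, radius) tuples and `small_area` as a function name are assumptions for illustration.

```python
def small_area(trackers, width, height):
    """(min_x, max_x, min_y, max_y) covering 4 radii around each (x, y, radius) tracker."""
    trackers = list(trackers)
    min_x = min(x - 4 * r for x, y, r in trackers)
    max_x = max(x + 4 * r for x, y, r in trackers)
    min_y = min(y - 4 * r for x, y, r in trackers)
    max_y = max(y + 4 * r for x, y, r in trackers)
    return (max(0, min_x), min(width - 1, max_x), max(0, min_y), min(height - 1, max_y))
```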

You can also manually adjust the area that is updated using the minX, maxX, minY, and maxY sliders that appear when the show_clipping check box is on. If you do this, you will want to un-check the small_area setting.

To turn off the small area tracking, and to undo the selection of a manual region, click the full_area checkbox once. It will reset the update area to cover the whole image and turn small-area tracking off.

Reading the position of a tracker

You can check the position of each tracker in turn by clicking on each with the right mouse button, which makes each the active tracker that shows its values in the x, y, and radius displays. Note that these may show subpixel positions and radii.

Performance adjustments

You can control the minimum step size that the optimizer will use by adjusting the precision slider within the tk control panel. Smaller values make the program run more slowly but will result in the program attempting to find the position with greater precision (this will not necessarily correspond to greater accuracy, due to noise and possibly aliasing).

You can also adjust the number of samples made to determine the fit at each spot location. The sample_spacing slider controls both the number of circles around each point that are sampled and the number of samples around each circle. The default spacing of one pixel causes a pixel-sized step to be taken between the center and each radial distance, and also a single-pixel step to be taken around each circle. The computation time varies as the inverse square of the sample spacing. Note that the samples are not taken at pixel centers, but are interpolated based on the tracker center. Also, the starting location is staggered for each new circle (moving a half pixel further around) to prevent sampling preferentially along the horizontal axis. The image shown here is for the symmetric tracker, which does not sample at the center; the cone and disc trackers have a single sample in the center.
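
The sampling pattern described above can be generated as follows. This is a hypothetical sketch: rings are `spacing` pixels apart, each ring gets about one sample per `spacing` pixels of circumference, and each new ring starts half a sample step further around than the previous one. The exact stagger and rounding in the program may differ.

```python
import math

def sample_offsets(radius, spacing=1.0, center_sample=True):
    """(dx, dy) sample positions relative to the tracker center, in pixels."""
    offsets = [(0.0, 0.0)] if center_sample else []   # cone/disc sample the center
    n_rings = int(math.floor(radius / spacing + 1e-9))
    for ring in range(n_rings):
        r = spacing * (ring + 1)
        n = max(1, int(round(2.0 * math.pi * r / spacing)))  # samples on this circle
        step = 2.0 * math.pi / n
        start = 0.5 * step * ring      # stagger each new ring by half a sample step
        offsets.extend((r * math.cos(start + k * step), r * math.sin(start + k * step))
                       for k in range(n))
    return offsets
```

Doubling the spacing halves both the number of rings and the samples per ring, so the sample count (and the work) drops by roughly a factor of four.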

Recording the tracker motion (and video)

You can record the motion of the trackers by checking the Logging checkbox within the log control panel (shown to the right); in version 04.07 this panel was moved inside the kernel control panel. Checking Logging brings up a dialog box that lets you select a filename. The program automatically adds the default extension “.vrpn” to the filename you create. (A second file, with the extension “.csv”, will also be created, though the program does not mention it.) Once you have selected a file name, check the play_video checkbox to begin going through the video file, or use single_step_video repeatedly to step through one frame at a time. When you are finished with the section of tracking that you are interested in, uncheck the Logging checkbox to stop logging. You can then go forward to another section, turn logging back on, and save a different file if you like.

At the time logging is started, the value of the Relative to active tracker start check box is used to determine the origin of the reported positions (orientation and radius are always absolute). If the box is unchecked, the positions are in pixels with respect to the center of the pixel in the upper-left corner of the screen as the origin. If the box is checked, the positions are in pixels with respect to the location of the active tracker when logging was turned on.

Logging will not occur while the file is paused, or when the end of the video has been reached (this also means that the positions of the trackers in the last frame of video will not be stored). This is done so that you can place the trackers back onto spots that have jumped too far between frames when single-stepping through the video and still get an accurate record of where the spots went. There will be one entry in the file per tracker for each frame of video stepped through or played through (unless optimization is turned off during that frame and the Log when not optimizing checkbox is not checked). The time values associated with each record are the wall-clock time at which the particular frame left the screen (when the next frame appeared); this is probably not useful and so should be ignored. Note: Some video files have a 30 frame/second playback but have three copies of the same video frame to produce an overall update of 10 frames/second; this will produce repeated location reports for each of the identical frames.

The motion of the trackers over time is stored in two log files. The first is in Comma-Separated Values format with the extension .csv. This text format can be viewed in a text editor or loaded by several spreadsheet and analysis programs. The reports are stored in the file in frame order, with multiple tracker entries interleaved for each frame. If you want to view the individual traces, you should use the Excel data sorting tool to sort the list by the Spot ID column.
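
As an alternative to sorting in Excel, the interleaved .csv log can be split into per-spot traces with Python's standard `csv` module. Only the "Spot ID" column name comes from the text above; the other column names in the example data are hypothetical, and `traces_by_spot` is an illustrative name.

```python
import csv
import io
from collections import defaultdict

def traces_by_spot(csv_text):
    """Group rows of the tracker .csv log by their 'Spot ID' column.

    Rows keep their original (frame) order inside each trace; all other
    columns are passed through unchanged as strings.
    """
    traces = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        traces[row["Spot ID"].strip()].append(row)
    return dict(traces)
```

For a file on disk, read it with `open(path, newline="")` and pass the text (or hand the file object straight to `csv.DictReader`).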

The second log file is in the Virtual-Reality Peripheral Network (VRPN) format. This format can be converted into a Matlab data file using the VRPNLogToMatlab program, which is also available for download from the CISMM software download page. You convert the “.vrpn” file into a Matlab file by dragging it onto a shortcut that points to the program (or onto the program itself).

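Data description: Positions and radii are reported in units of pixels. Unless Relative to active tracker start was checked when logging began, positions have their origin at the center of the pixel in the upper-left corner of the screen. +X is towards the right on the displayed window and +Y is towards the bottom.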
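Because versions before 3.00 used the lower-left pixel as the origin, and because relative logging only shifts the origin, converting between these conventions is a matter of subtraction. The sketch below assumes you know the image height in pixels yourself; the helper names are hypothetical and not part of Spot Tracker or VRPNLogToMatlab.

```python
def to_lower_left_origin(x, y, image_height):
    """Hypothetical helper: convert a position whose origin is the center
    of the upper-left pixel (+Y down) into one whose origin is the center
    of the lower-left pixel (+Y up). X is unchanged."""
    return x, (image_height - 1) - y


def relative_to(x, y, x0, y0):
    """Hypothetical helper: shift a position so that (x0, y0) becomes the
    origin, as the Relative to active tracker start option does using the
    active tracker's location when logging starts."""
    return x - x0, y - y0


# A bead at the center of the top-left pixel of a 480-row image.
print(to_lower_left_origin(0.0, 0.0, 480))
# A bead that moved 2.5 px right and 2 px down from where logging began.
print(relative_to(12.5, 7.0, 10.0, 5.0))
```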

Orientation assumes square pixels and is reported in degrees: zero degrees means the rod is aligned with the X axis, 45 degrees means it lies along +X, -Y (slanting to the upper right on the display), and 90 degrees means it is aligned with the Y axis.
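The convention above amounts to measuring the angle with the Y axis flipped to point up. The sketch below computes an orientation in this convention from a direction vector (dx, dy) given in image coordinates. Folding the result into [0, 180) is an assumption based on a rod looking the same after a 180-degree rotation; it is not necessarily the range Spot Tracker reports. The function name is hypothetical.

```python
import math


def rod_orientation_degrees(dx, dy):
    """Hypothetical helper: orientation of a rod whose long axis points
    along (dx, dy) in image coordinates (+X right, +Y down).

    Y is negated so that a rod slanting up and to the right on the
    display (+X, -Y) comes out at 45 degrees. The result is folded into
    [0, 180) on the assumption that a rod has no head or tail.
    """
    angle = math.degrees(math.atan2(-dy, dx))
    return angle % 180.0


print(rod_orientation_degrees(1.0, 0.0))   # along X
print(rod_orientation_degrees(1.0, -1.0))  # along +X, -Y
print(rod_orientation_degrees(0.0, 1.0))   # along Y
```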

When the FIONA tracking kernel is used, the radius field refers to the standard deviation of the Gaussian that is being fit. The Fit Background field refers to the pixel-intensity count of the background value added to the Gaussian at the best fit. The Gaussian Summed Value field refers to the volume under the Gaussian (in units of square pixels times intensity units). The Mean Background field reports the mean of the pixels that are 5 pixels from the Gaussian center. The Summed Value field is the sum of the pixels within a 5-pixel radius around the center of the Gaussian with the mean background subtracted from each.
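The Mean Background and Summed Value fields can be approximated from the pixels around a bead as follows. This is a sketch under stated assumptions, not the FIONA kernel's code: it treats pixels whose centers lie within half a pixel of the 5-pixel circle as being “5 pixels from the center”, and pixels whose centers are at most 5 pixels away as lying “within a 5-pixel radius”. The function name is hypothetical.

```python
import math


def fiona_background_and_sum(image, cx, cy, radius=5.0):
    """Hypothetical helper: return (mean_background, summed_value).

    image is a 2-D list indexed image[row][column], i.e. image[y][x].
    mean_background: mean of pixels whose centers are within half a pixel
    of the circle of the given radius around (cx, cy) (assumed ring rule).
    summed_value: sum over pixels within the radius of (pixel - background).
    """
    ring, inside = [], []
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            d = math.hypot(x - cx, y - cy)
            if abs(d - radius) < 0.5:
                ring.append(value)
            if d <= radius:
                inside.append(value)
    if not ring:
        raise ValueError("the ring around the center lies outside the image")
    mean_background = sum(ring) / len(ring)
    summed_value = sum(value - mean_background for value in inside)
    return mean_background, summed_value


# A flat background of 10 with one bright pixel (110) at the center.
img = [[10] * 15 for _ in range(15)]
img[7][7] = 110
print(fiona_background_and_sum(img, 7, 7))
```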

If the video checkbox is selected, Spot Tracker will also store a vrpn_Imager copy of the video data on a device named TestImage. The first frame of the video and every 400th frame of the video will be stored as the entire frames; intermediate frames will only have video in them for regions around the existing trackers (twice the radius of each). This provides a way to store a much smaller video that records all of the motion at and near beads in case they need to be re-tracked later with different parameters. It also provides a pass-through mechanism for another program to connect to a live running Spot Tracker program and watch both the (subset) video and the bead motion.
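The storage rule above can be sketched as a function that returns the rectangles to keep for a given frame. It assumes zero-based frame numbers (so the first frame is frame 0), and it interprets “twice the radius” as a square whose half-width is twice the tracker radius, clipped to the image; both are assumptions, and the function name is hypothetical.

```python
import math


def regions_to_store(frame_number, trackers, width, height, full_frame_interval=400):
    """Hypothetical helper: return (x_min, y_min, x_max, y_max) pixel
    rectangles to keep for one frame.

    trackers is a list of (x, y, radius) tuples in pixel coordinates.
    Frame 0 and every full_frame_interval-th frame are kept whole;
    otherwise only a square of half-width 2*radius around each tracker
    is kept, clipped to the image bounds (assumed interpretation).
    """
    if frame_number % full_frame_interval == 0:
        return [(0, 0, width - 1, height - 1)]
    regions = []
    for x, y, radius in trackers:
        half = 2 * radius
        regions.append((
            max(0, math.floor(x - half)),
            max(0, math.floor(y - half)),
            min(width - 1, math.ceil(x + half)),
            min(height - 1, math.ceil(y + half)),
        ))
    return regions


print(regions_to_store(0, [], 640, 480))
print(regions_to_store(1, [(100.0, 50.0, 5.0)], 640, 480))
```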

Controlling playback

Whether logging is turned on or not, the video can be played by checking the play_video checkbox, and paused by unchecking it. The single_step_video checkbox will step forwards one video frame each time it is pressed. The rewind checkbox causes the video to rewind to the beginning, play the first frame of the video, and then pause. The FrameNum display shows which frame of a video file is currently being displayed.

Background removal

When the background_subtract checkbox is selected, the program computes a running average of the video frames and subtracts the current frame from the running average. After 50 frames have been added, no more are used to form the average. This is intended to help separate moving objects from a stationary background that confuses the tracking.
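A minimal sketch of the averaging rule described above. It assumes each frame is a 2-D list of intensities, that the current frame is included in the average before subtraction, and that the subtraction direction is exactly as stated (average minus current frame). The class name and the max_frames parameter are hypothetical.

```python
class BackgroundSubtractor:
    """Hypothetical sketch: running-average background removal that stops
    updating the average once max_frames frames have been accumulated."""

    def __init__(self, max_frames=50):
        self.max_frames = max_frames
        self.count = 0
        self.total = None  # per-pixel sum of the frames averaged so far

    def process(self, frame):
        if self.total is None:
            self.total = [[0.0] * len(row) for row in frame]
        if self.count < self.max_frames:
            # Still building the average: add this frame to the sum.
            for total_row, row in zip(self.total, frame):
                for i, value in enumerate(row):
                    total_row[i] += value
            self.count += 1
        # Subtract the current frame from the running average, as described.
        return [
            [t / self.count - value for t, value in zip(total_row, row)]
            for total_row, row in zip(self.total, frame)
        ]


subtractor = BackgroundSubtractor(max_frames=2)
for frame in ([[4.0]], [[6.0]], [[10.0]]):
    print(subtractor.process(frame))
```

Once the limit is reached the average is frozen, so the third frame above is compared against the mean of the first two only.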

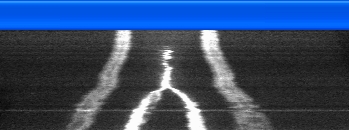

Kymograph function

Clicking the kymograph button in the main control panel brings up two more windows and modifies the behavior of the first four tracked spots in the image. This function is aimed at the study of cellular mitosis, and it provides a “spindle-centered” trace through the image. The two centrosomes define the ends of the spindle. The point halfway between the two centrosomes is defined as the spindle center, which moves from frame to frame. The line through the two centrosomes is defined as the spindle axis, which rotates from frame to frame.

When kymograph mode is enabled, the third and fourth points (the ones that would normally be labeled “2” and “3”) are relabeled “P” and “A” for posterior and anterior. These two points are not optimized each frame no matter what setting the kernel optimization checkbox has, as they are used to label the ends of the cell. A green line bisects the image halfway between these points, indicating the 0 value on the cell-centered axis. Negative values (to the left on the kymograph_center chart) are towards the posterior end; positive towards the anterior. The white line down the center indicates the cell center; dim lines indicate 10-pixel steps, and brighter lines indicate 100-pixel steps away from the center.
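One way to compute the value plotted on the cell-centered axis is to project the spindle center (midway between points 0 and 1) onto the line from P to A, measuring from the point midway between P and A. The sketch below does this with a signed projection; it is an interpretation of the description above, not the program's code, and the function name is hypothetical.

```python
import math


def spindle_offset_along_cell(p0, p1, posterior, anterior):
    """Hypothetical helper: signed distance (pixels) of the spindle center
    from the cell center, measured along the posterior-to-anterior axis.

    Negative values are towards P and positive towards A, matching the
    kymograph_center chart's convention.
    """
    sx, sy = (p0[0] + p1[0]) / 2, (p0[1] + p1[1]) / 2  # spindle center
    cx = (posterior[0] + anterior[0]) / 2  # cell center (zero on the axis)
    cy = (posterior[1] + anterior[1]) / 2
    ax, ay = anterior[0] - posterior[0], anterior[1] - posterior[1]
    length = math.hypot(ax, ay)
    if length == 0:
        raise ValueError("P and A must be at different positions")
    # Dot product with the unit vector pointing from P towards A.
    return ((sx - cx) * ax + (sy - cy) * ay) / length


print(spindle_offset_along_cell((60, 10), (80, -10), (0, 0), (100, 0)))
```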

It is important not to delete any trackers in kymograph mode. This will prevent the A and P points from behaving properly, and will also prevent proper following of the centerline.

The first window is named kymograph and it will accumulate traces from the image through the first two tracked points (the points labeled “0” and “1”). If there are not at least two tracked points, nothing is shown in this window. As the video is stepped through or played, a new line will be added to the window that is a resampling of the image pixels. The center of this window on each line corresponds to the spindle center and the sampling is done along the spindle axis. A green line is drawn between points 0 and 1 to indicate the spindle axis, and a green cross-line indicates the spindle center.
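Each kymograph line can be thought of as a resampling of the image along the spindle axis, centered on the spindle center. The sketch below uses bilinear interpolation at one-pixel spacing and returns 0 for samples that fall outside the image; the interpolation method, the spacing, the line length (half_length), and the function names are all assumptions for illustration.

```python
import math


def bilinear(image, x, y):
    """Hypothetical helper: bilinearly interpolate image[y][x]; returns 0.0
    outside the image."""
    h, w = len(image), len(image[0])
    if x < 0 or y < 0 or x > w - 1 or y > h - 1:
        return 0.0
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def kymograph_line(image, p0, p1, half_length):
    """Hypothetical helper: sample 2*half_length + 1 points, one pixel
    apart, along the axis from p0 to p1, centered on their midpoint."""
    cx, cy = (p0[0] + p1[0]) / 2, (p0[1] + p1[1]) / 2  # spindle center
    dx, dy = p1[0] - p0[0], p1[1] - p0[1]
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("points 0 and 1 must be at different positions")
    ux, uy = dx / length, dy / length  # unit vector along the spindle axis
    return [bilinear(image, cx + t * ux, cy + t * uy)
            for t in range(-half_length, half_length + 1)]


# An image whose pixel values equal their column index.
img = [[float(c) for c in range(10)] for _ in range(5)]
print(kymograph_line(img, (2.0, 2.0), (6.0, 2.0), 2))
```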

The second window is named kymograph_center, and it shows how the spindle center moves within the cell. If there are not at least four tracked points, nothing is shown in this window.

Performance measures

The speed and accuracy of the video spot tracker has been tested against a number of generated data sets, each of which has a known contrast and noise value. The results of these tests (and the images themselves) can be found at http://www.cs.unc.edu/~taylorr/vst_tests.

Version Information

Version 8.01:

- Fixed bugs in the brightness of the displayed images for 16-bit images when texture video is being used. Adjusted the brightness to scale around the middle value when background subtraction is turned on. None of these affect tracking performance.

- Added a slider to allow image averaging for the image kernel, in addition to the one for the imageor kernel. Note that setting this larger than one can cause drift away from the visual center of the object because there is nothing stabilizing the kernel.

- The image and imageor trackers now rebuild when the tracker radius is adjusted.

Version 8.00:

- Added two new image-based tracking kernels, one of which follows asymmetric objects that rotate and one of which follows asymmetric objects that do not rotate.

- Added a feature to enable autofinding only in the first frame of a video, then not afterwards.

- Fixed an out-of-memory bug when lots of beads are being found using autofind but video was not being passed through — the lost trackers needed for video storage were being accumulated but never deleted in that case.

- Fixed two bugs with background subtraction that were causing the preview window to display the information improperly. The code for tracking saw the correct images, and things worked if texture video was off, but the display in the normal case was rescaled in space and intensity.

- Spot tracker now pre-fetches frames when reading from the image stack reader. This helps tracking throughput.

- Fixed a bug where storing video sometimes lost frames due to a VRPN pong message arriving at the server and causing it to delete the non-filled-in parts of the video.

- Fixed crashes on exit when no file was selected in the opening dialog.

- Modified autodelete behavior to leave the highest-numbered remaining particle as the selected one, to match manual-deletion behavior.

- Hid the console window on the Mac, and cleaned up the version string and the exit behavior when the program notices a problem.

- Fixed a crash bug when loading TIFF files on the Mac.

Version 7.07:

- Added CUDA acceleration to the lost-tracking detection for brightfield tracking with symmetric trackers. This speeds up the tracking considerably when you are looking for lost trackers.

- Fixed a bug in lost-tracking code where the wrong equation was being used to determine how many samples to do around a circle. The bug seems to have been introduced after version 07.03. If you’re using lost-tracking detection, you’ll want to upgrade. It will be slower than the old version (if not using CUDA), but it will be testing all directions rather than only a few of them.

- Fixed a bug with how prediction was implemented.

- Fixed several bugs with storing compressed video while doing tracking. Picking and moving trackers now works correctly. The whole region around the motion of a bead is now stored, rather than just a region around the final position. An entire frame is now stored whenever a bead becomes lost, so that replay will still find that it is lost. Fixed replay from stored files so that it does not skip the first frame.

Version 7.05:

- Fixed a bug where the brightfield autofind code was not using the brightfield lost detection, making it not find all of the beads it should have found. This bug was probably introduced in version 07.00.

- Fixed several bugs in the VRPN-file logging: a potentially large number of frames of data could be lost at the beginning of a log and one frame at the end, and video frames were stored off by one. These bugs were probably introduced in version 6.00.

Version 7.04:

- Fixed a bug where the autofind code was not using the parameters set in the GUI or on the command line. This bug was probably introduced in version 07.00.

- Added the center-surround feature from 07.03 to the state file and provided a command-line argument to specify it.

Version 7.03:

- Added feature to subtract the local background illumination from the scene before running lost and found processing. This helps remove the impact of non-uniform background illumination when selecting a threshold for finding beads in fluorescence images.

- Fixed a bug in the CUDA version where turning on tracker optimization stopped the blurring kernel from working.

- Fixed a crash bug if you ran vrpn_spot_tracker_nogui without giving it a file name to track in.

- Rearranged the lost_and_found GUI to make it clear which settings to use for fluorescence and for brightfield.

Version 7.02:

- Fixed a bug with the radius of auto-find trackers not being set correctly.

Version 7.01:

- Installs both CUDA and non-CUDA versions of the software. The CUDA version requires an nVidia driver that was updated in May 2012 or later to run.

- Fixed a bug where the program would crash during exit if you did not select a file to open.

Version 7.00:

- Fixed a bug introduced in 6.10 that caused the spacing and precision to both be 0.25 for new trackers no matter what the GUI settings were.

- Fixed a long-standing bug where the spacing around the circle for symmetric kernels was always 1.0 no matter what the GUI settings were.

- Fixed a bug for blurring during autofind introduced in 6.10 that caused the left and top edge to blur incorrectly.

- The symmetric tracker will now use CUDA to have the graphics card do the tracking calculations so long as the requested precision is greater than 1/256 pixel (the bilinear interpolator in the texture reads can only go to 256 discrete positions between pixels). This speeds up tracking.

Version 6.11:

- Fixed a bug that kept it from being able to open some video files.

- Tweaked GUI layout so the windows don’t overlap on operating systems with particularly wide borders.

Version 6.10:

- Re-implemented the ability to open video files. The new implementation is in FFMPEG, which is a cross-platform solution. The older, DirectShow, method did not work on Windows 7 because of changes in the API structures.

Version 6.09:

- Fixes crash-on-exit for Windows XP.

Version 6.08:

- Avoids memory increase with fluorescence autofind (keeps it from crashing).

- Fixes memory leak when loading multi-layer TIFF files.

Version 6.07:

- Fluorescence autofind ignores the image in the dead zone when computing the maximum and minimum values to use.

Version 6.06:

- Added the ability to read VRPN Imager files (had accidentally gone away with the CMake build in version 06.05).

Version 6.05:

- Added a control to limit the number of connected components that will be checked during fluorescence autofind. This prevents the program from using up all of memory and crashing when trying to place additional trackers in a noisy image.

- BUG: This version cannot open DirectShow video files or devices. Microsoft changed the interface and this version has not been modified to use the new one. Previous versions will also not be able to open DirectShow files on the latest version of DirectX (the one found on Windows 7, for example) due to this interface change.

Version 6.04:

- Added an intensity-based fluorescence auto-find routine, including a user interface, command-line arguments, and saved-state code. It works in parallel with the existing auto-find code (both or either can be used).

Version 6.03:

- Fixed a bug that kept it from writing a VRPN-format output file when it was asked to log at the command line.

- Fixed a bug that kept auto-finding from working with non-symmetric kernels (in particular, it is known to have failed with the cone kernel).

- Adds a command-line argument to make it restart tracking using the last tracking positions found in a stored CSV file from a previous run. This enables chaining tracking runs across multiple video files.

Version 6.02:

- Made it so that the program can load arbitrarily-sized 8-bit, single-channel raw files (some preliminary work has been done towards either multi-channel or 16-bit files, but the UI and the raw reader still need to be modified). This generalizes the Pulnix raw-file reading to also work with Point Grey and other cameras. The program tries to determine the frame size automatically (checks for 648×484 and 1024×768 with 112-byte padding), and if it cannot find one then it throws a dialog box asking for the size. There is a command-line argument that can be used to specify the size (avoiding the dialog box and enabling override of the guessed size if needed).

- Adds the ability to read series of PPM and PGM files.

Version 6.01:

- Updated the installer to use an external manifest and to remove an un-needed dependency that made the program fail to run on some machines.

Version 6.00:

- Added video check-box to the logging control that enables logging of vrpn_Imager data to the VRPN file (and to any attached client). It stores complete frames every 400th frame, and in between sends video only around the current trackers (twice their radius). This provides a way to forward and to store that subset of the video relevant to the experiment.

Version 5.29:

- Added Save State and Load State buttons that store the current kernel, lost-and-found, exposure, and precision settings to a file, so that if you have a favorite setup you can reuse it on other files. Also added a “-load_state” command-line option so that you can use this with a script. As a special hidden bonus, you should know that each line from the state file is passed as-is to the Tcl interpreter, so this could potentially be used to script things besides state.

- Added a -nogui command-line parameter that turns off the video display window and enables tracking at greater than the frame-refresh rate.

Version 5.28:

- Added an -intensity_lost_sensitivity command-line parameter so that batch files can use the features included in 5.27.

Version 5.27:

- Added an intensity-based lost-bead detection setting that compares the value at the center of a bead with the mean of the pixels surrounding the bead. If the squared difference is less than the scaled variance, then the bead is not distinguishable from background and is marked as lost.

- Changed the cone kernel so that its weights go from 1 at the center to 0 at the specified radius. Before, it had gone to -1 at the edge, which caused it to accentuate the importance of the background and wander off beads.
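The intensity-based test described in the 5.27 entry can be sketched as below. How the variance is computed (population variance here) and how the sensitivity setting scales it (a simple multiplier here) are assumptions; the function name is hypothetical.

```python
def is_lost_by_intensity(center_value, surrounding, sensitivity):
    """Hypothetical helper: True if the bead center is not distinguishable
    from its surroundings.

    Compares the squared difference between the center value and the mean
    of the surrounding pixels with the variance of those pixels scaled by
    sensitivity (assumed to be a plain multiplier).
    """
    if not surrounding:
        raise ValueError("need at least one surrounding pixel")
    n = len(surrounding)
    mean = sum(surrounding) / n
    variance = sum((v - mean) ** 2 for v in surrounding) / n  # population variance
    return (center_value - mean) ** 2 < sensitivity * variance


print(is_lost_by_intensity(10.5, [9, 11, 9, 11], 1.0))
print(is_lost_by_intensity(20.0, [9, 11, 9, 11], 1.0))
```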

Version 5.26:

- Fixed a bug where deleting a tracker would sometimes change the radius of the new active tracker.

- Made the image-based tracker used in follow jumps mode reduce its radius rather than give up when it is close to the edge of the image.

- Made the follow_jumps check radius 4 times the bead radius.

- Modified the symmetric kernel so that it does not return NaN values when it is on the image edge.

Version 5.25:

- Fixed memory leak that was causing the program to crash after running for a long time with auto-find turned on for symmetric trackers.

- Removed the upper limit on number of trackable beads.

Version 5.24:

- Added slider control for the SMD local neighborhood radius to the lost and found control panel.

- Added command-line options for all lost-and-found functions and for the default tracker radius. This makes it easier to set up a shortcut to automatically run through and save all data for a particular video file, enabling hands-free offline processing of entire files once you know the best auto-find parameter settings.

Version 5.23:

- Added an optional dead band around the edge of the selection area so that trackers going into this area are marked as being lost.

- Added an optional dead band around each tracker so that trackers that get too close together will have all but one marked as being lost.
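The per-tracker dead band can be sketched as a pairwise distance check. The entry does not say which tracker of a close pair survives; this sketch keeps the lowest-numbered one, which is an assumption, and the function name is hypothetical.

```python
import math


def trackers_lost_by_proximity(positions, dead_band):
    """Hypothetical helper: return the set of tracker IDs to mark as lost.

    positions maps tracker ID -> (x, y). Whenever a surviving tracker has
    another surviving tracker closer than dead_band pixels, the
    higher-numbered one is marked lost (an assumed tie-breaking rule).
    """
    ids = sorted(positions)
    lost = set()
    for i, keep in enumerate(ids):
        if keep in lost:
            continue
        kx, ky = positions[keep]
        for other in ids[i + 1:]:
            if other in lost:
                continue
            ox, oy = positions[other]
            if math.hypot(ox - kx, oy - ky) < dead_band:
                lost.add(other)
    return lost


print(trackers_lost_by_proximity({0: (0, 0), 1: (3, 0), 2: (100, 100)}, 5.0))
```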

Version 5.22:

- Added Ryan Schubert’s auto-bead-finding code that locates new beads in an image in an attempt to maintain a minimum number of active beads.

Version 5.21:

- Added maintain_this_many_beads control to the lost_and_found control panel to automatically find beads within the image when there are too few remaining.

- Made the cone kernel penalize bright patches around the outside, rather than just not weighting them as having any value.

Version 5.20:

- Fixed a bug where it could miss deleting a lost tracker if more than one were marked for deletion in the same frame.

Version 5.19:

- Fixed a crash bug when the last tracker is autodeleted by the “lost and found” code.

Version 5.18:

- Added reporting of the actual mean background value and summed value near the bead to the FIONA tracker output (the previous version reported the best-fit Gaussian’s values).

Version 5.17:

- Added reporting of background value and summed value for FIONA tracker output.

Version 5.16:

- Fixed bug that kept the program from opening large SPE-format files (taken with Roper cameras).

- Fixed bug that made the FIONA kernel somewhat averse to crossing pixel boundaries. Thanks to Yuval Ebstein for pointing this out and sending a data set to debug it on.

Version 5.15:

- Fixed bug that caused a crash when it was started from the command-line with a FIONA tracker.

- Added -FIONA_background command-line argument to set the default.

Version 5.14:

- Reworked the “lost tracking” interface to be on a separate control panel that comes up when show lost and found is selected on the main GUI.

- Added “Hover” option to the lost tracking choices, where the tracker will sit at the same location without recording its location to file until it is once again not lost. This makes it possible to track beads that blink out for a brief period and then reappear.

- Changed the behavior of the FIONA kernel so that it will not go negative unless the invert button is pressed on the kernel controls. Also modified its lost-tracking sensitivity so that it works well with the range 0-1 on the slider.

Version 5.13:

- Added option to not log when optimization is turned off for a frame, so that people can skip frames where a single-fluorophore bead has blinked off.

- Canceling the “Logging” dialog now clears the logging checkbox.

- Cancelling the “Optimize in Z” dialog no longer crashes the program.

- It is now possible to load PNG files directly by dragging them onto the spot-tracker program without having to first convert them to TIFF files (note: these are often compressed and so should not normally be used).

Version 5.11:

- Added “autodelete when lost” checkbox that will delete a tracker when it exceeds the lost-tracking sensitivity level. This lets people start a lot of trackers and then not have to stop every time one gets lost.

Version 5.10:

- Added support for multi-layer TIFF files. The previous versions would only show the first frame from each file. This version will step through each frame in each file.

Version 5.09:

- Removed bias for +X and +Y directions, larger radius, larger orientation, and larger Z from the optimization routines. Before, it would always test those directions first and go there if it got better. Testing involving noise images revealed that this adds a bias. It now looks in both directions and selects the best out of the +, -, and no-step values as the new location.

- Added command-line arguments to facilitate using the program as part of a script. This was done to enable automatic testing of the program, but it could also be used to have the program automatically start, track, and stop when finished. Run as “video_spot_tracker.exe -help” to see a description of the arguments.
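A simplified sketch of the search rule in the 5.09 entry: for each parameter (for example position, radius, orientation, or Z), compare no step, a positive step, and a negative step, and keep the best. It assumes a fitness function where higher is better and fixed step sizes; Spot Tracker's actual optimizer is not reproduced here, and all names are hypothetical.

```python
def optimize(fitness, params, steps, max_iterations=100):
    """Hypothetical sketch of an unbiased coordinate search.

    For each parameter, the candidates are no step, +step, and -step, and
    the best-scoring one is kept. Because all three are scored before
    choosing, neither direction is favored; no step is listed first, so
    it wins ties (Python's max returns the first of equal maxima).
    """
    params = list(params)
    for _ in range(max_iterations):
        moved = False
        for i, step in enumerate(steps):
            candidates = []
            for delta in (0.0, step, -step):
                trial = params.copy()
                trial[i] += delta
                candidates.append(trial)
            best = max(candidates, key=fitness)
            if best[i] != params[i]:
                params = best
                moved = True
        if not moved:
            break
    return params


def peak_at_3_minus_2(p):
    """Example fitness with a single maximum at (3, -2)."""
    return -((p[0] - 3) ** 2 + (p[1] + 2) ** 2)


print(optimize(peak_at_3_minus_2, [0.0, 0.0], [1.0, 1.0]))
```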

Version 5.07:

- Modified the FIONA code so that it uses a point-sampled Gaussian when fitting, and so that it optimizes the radius and background and volume as well as the position. This makes it match the Selvin lab’s code more closely.

- Added command-line arguments to let you run the program from within a script and have it track, log, and quit without user intervention. It still shows the interface on the screen so you can see what happens.

Version 5.06:

- Made it so that you can resize the tracker window and have the mouse clicks still line up with the video data when using OpenGL for viewing. (OpenGL viewing is currently the default as long as the hardware supports it). This lets you “zoom in” by making the window larger on the screen, or shrink too-large windows to fit on your screen.

- Fixed a bug that kept recent versions from tracking on any but the red channel of video.

- Fixed a bug that made red and blue be inverted for DirectX cameras and all video files when using color cameras.

Version 5.05:

- Fixed another bug in the SEM file replay. It should happen fast now.

Version 5.04:

- Fixed bug in SEM file replay.

Version 5.03:

- Removed control for FIONA, which is not yet fully debugged.

Version 5.02:

- Made the tracking multi-threaded, so that all available processors will be used to track. When more than one tracker is active, this should make the program faster (up to a maximum frame rate that matches the display frame rate).

Version 5.01:

- Raised the maximum number of tracked particles in the VRPN logfile from 100 to 500.

Version 5.00:

- Adds OpenGL-accelerated transfer of the video to the display using texture memory. This makes the display much faster and leaves the CPU free to do tracking.

- Replaced the bit_depth slider with a brighten slider to make the internal code more consistent. This will have no effect on non-OpenGL-accelerated 8-bit images but will still function for 12- and 16-bit cameras and for loaded image stacks (which internally use a 16-bit format even if they are 8-bit images).

- Fixed a bug that prevented display of UNC SEM image files.

Version 4.08:

- Adds a Cooke camera driver.

- Fixes the documentation to include the image of the spots in a circle.

Version 4.07:

- Removes VRPNLogToMatlab installer; it is now available as a separate package with its own version number.

- Rearranged the GUI elements to keep from making the left panel too long. Consolidated the logging control panel into the kernel control panel.

- Made round cursors the default, now that we have such nice ones. 🙂

- Turns off tracking and deletes traces when rewind is pushed. This was requested by a user to be the behavior, and it certainly seems likely to be the common case.

- Made the “round cursor” option for the rod3 tracker display something useful. In this case, a rectangle with lines coming in.

Version 4.06:

- Adds visible traces of logged positions that show up on the screen.

Version 4.05:

- Adds “Thank-you Ware” button to the program.

Version 4.04:

- Includes background_subtract feature that will subtract up to 50 frames of averaged background data.

- Displays the video much faster.

- Switches the RGB slider to a radiobutton.

Version 4.03:

- No longer requires separate installation of ImageMagick libraries.

Version 4.02:

- Fixes bug in 04.01 where the tracker locations would be logged a bunch of times at the same frame number when one of the trackers had lost tracking.

Version 4.01:

- Adds heuristic to determine when tracking is not being robust, and a slider to select a threshold value. When the threshold is exceeded, the program says “lost” and stops playback of the video until the problem is corrected. This is intended to be used to enable eyes-free use of the program without having the trackers go wandering off to nowhere while you’re not looking. It also provides frame-accurate stopping, so that even with video file formats like AVI that have a buffer of frames, it stops right at the frame where the problem is. The heuristics for symmetric-kernel tracking are optimized more than those for the disc and cone kernels; no telling if they work very well.

- Big, fat “Quit” button added in place of wimpy little check-box that easily got lost in the noisy interface.

Version 4.00:

- Modified the output format for both the CSV and VRPN files to include the frame number. In the CSV file, the frame number replaces the (bogus) time value. In the VRPN file, it is provided as an additional vrpn_Analog report. This report is properly converted by version 2.0 and later of the VRPNLogToMatlab program (ships with Video Spot Tracker); earlier versions will silently ignore the extra data.

- Note that the output format of the VRPNLogToMatlab program included here is not compatible with the output of the earlier versions. It changes the name of the matrix and includes different data.

Version 3.07c:

- Adds test of control for EDT board (which we use to control the Pulnix camera).

Version 3.07:

- Can open and track in Roper SPE stack files.

- Removed harmless error message printed to console window when no Z-track file loaded.

Version 3.06:

- Does not require tracking to be on in order to draw the kymograph.

- Says “Done with the video” and pauses when it gets to the end.

Version 3.05:

- 3D bead tracking based on averaged spread functions created using the CISMM Video Optimizer tool. This lets the depth of each bead be estimated each frame.

Version 3.04:

- The “follow jumps” code in this version is much faster than in prior versions. It only looks at the vertical and horizontal lines through the spot, rather than at all parts of the image. This seems to be nearly as robust as the earlier method.

- This version adds a “predict” option that enables it to use velocity information to reduce the number of times the image match must be done. When used in conjunction with “follow jumps” it makes tracking even faster for beads on beating cilia.

- This version saves the last tracked position (the current one when the program quits or when the log file is closed). This means that it saves positions for all of the frames, from the first through the final, for a video sequence. Earlier versions lost either the first or the last frame. This was important for “videos” with small frame counts.

Version 3.03:

- It is now possible to track up to 100 spots in one image. Earlier versions could only track up to 20 spots (but they would not report this condition — logging simply didn’t happen for spots numbered 20 and up). The new version won’t let you create more trackers than can be logged.

- When the kernel type is changed, this version does not renumber the trackers. Version 3.02 did renumber them, resulting in an ever-increasing base number as the type was changed over time.

Version 3.02:

- When trackers are deleted, their indices are no longer re-used. Before, the deletion of bead 1 would shift the indices of all the other beads (on the screen and in the log file) greater than one. Also, the creation of a new bead after one was deleted used to re-use its index. Now, tracker indices remain the same so long as the tracker is present and are not re-used when trackers are deleted and created.

- Code was added to enable the trackers to follow jumps of up to about 2 bead radii between frames. This mode retains the same accuracy as non-jump-following code, but operates much more slowly. This is controlled by a check-box in the kernel control panel.

- Bug fixes to support both 8-bit and 16-bit input files.

Version 3.00:

- Major GUI changes: The behavior of the mouse buttons is completely different from their earlier behavior. The left mouse button now creates new trackers, and the right mouse button drags existing trackers around without changing their radius. There is not a tracker created at program startup. The “delete” and “backspace” keys can be used to delete the active tracker.

- Major output changes: The Y values are now reported with respect to the center of the pixel at the upper-left corner of the screen. Previous versions reported with respect to the center of the pixel at the lower-left corner. The new convention is in keeping with the output from the MetaMorph program. Also, images from Pulnix cameras read through the EDT device are inverted to make them match the images shown by the preview program that comes with the camera. It is now possible to save positions relative to the initial location of the active tracker when logging is started.

- Various bugs were fixed that caused the program to crash, use truncated filenames for logging, fail to open some types of files, or display incorrect intensities in the display window. The precision of position output has also been increased by decoupling it from the precision shown in the GUI.

Version 2.03b:

- Only the web page and installer have changed. They mention that you need to have the 16-bit version of ImageMagick 5.5.7 to load image stacks.

Version 2.03:

- Is able to load a series of TIFF (or .BMP, or other image file format) files and play through them sequentially as if they were a movie. The files must have sequentially-numbered names (there can be gaps between the numbers, but the gap must be consistent between each pair of file names). The files can be 8 bits or 16 bits (or 12 bits in 16, which is a common format for TIFF files exported by camera-control software).

- Will exit cleanly when one of the initially-displayed windows is closed by clicking on its (X) in the corner. If the main control panel is closed, it spits out a bunch of messages about not being able to destroy objects; these are harmless, indicating only that we are trying to get rid of things that have already been gotten rid of.

- Fixes a bug where trying to rewind or quit the program while the video was playing on a directx video file could cause the program to hang.
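The naming rule in the 2.03 entry (sequential numbers, with gaps allowed only if they are all the same size) can be checked with a short sketch like the one below. It assumes the frame number is the run of digits immediately before the file extension; the function name is hypothetical.

```python
import re


def ordered_frame_files(names):
    """Hypothetical helper: sort image file names by the number just
    before their extension and check that the numbers are evenly spaced.

    Raises ValueError if a name has no number before its extension or if
    the spacing between consecutive numbers is not consistent.
    """
    numbered = []
    for name in names:
        match = re.search(r"(\d+)\.[^.]+$", name)
        if match is None:
            raise ValueError(f"no frame number in {name!r}")
        numbered.append((int(match.group(1)), name))
    numbered.sort()
    numbers = [number for number, _ in numbered]
    gaps = {b - a for a, b in zip(numbers, numbers[1:])}
    if len(gaps) > 1:
        raise ValueError(f"inconsistent gaps between frame numbers: {sorted(gaps)}")
    return [name for _, name in numbered]


print(ordered_frame_files(["cell_0004.tif", "cell_0000.tif", "cell_0002.tif"]))
```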

Version 2.02:

- Will load in UNC’s optical fluorescence files that are in SEM format more rapidly than earlier versions. It also doesn’t keep them in memory, which will enable loading larger files.

Version 2.01:

- When the program is run with no arguments (or by double-clicking the icon on the desktop), it asks the user for a video file to track in.

Version 2.00:

- Fixed a bug in the logfile-creation code that caused it to crash sometimes.

- Changed major version number because 01.29 seemed like it was getting out of hand.

- Use this in preference to 01.26 through 01.28a, which all have the potential crash bug.

Version 01.28a:

- Updated the manual. Same program as 01.28.

Version 01.28:

- Improved kymograph now shows a trace of the spindle center with respect to the cell center.

Version 01.27:

- Added initial version of kymograph (misnamed kinograph) that records traces along the centerline between two spots. This is intended for use in cellular mitosis by placing the first two spots to be tracked on the centrosomes.

Version 01.26:

- Added the output of .csv log files in addition to the .vrpn log files. These are in a text format that can be read by several spreadsheet and analysis programs.

- Added support for a group of three kernels in a line that will track bars in the image